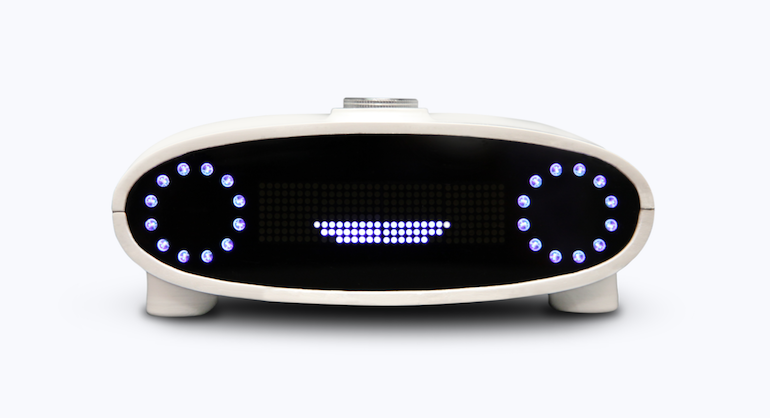

A few days with Mycroft.

Mycroft is an open-source home assistant. Kinda like Siri or Alexa, only ideally not spying on you and more adaptable and programmable.

I have been playing with it for a couple of days.

Install

Installing Mycroft went better than I expected. Cloned the repo, ran the startup and there it was.

It wasn’t listening to my microphone though. Which seemed odd, mostly because I have several microphones and didn’t yet tell it which to listen to. Some of them aren’t even microphones, they just look like microphones but really are software loopbacks and the like.

So that was the first struggle: How to specify a microphone input device. Turns out it’s using PulseAudio and I can select a microphone input by using the XFCE audio mixer. Mycroft shows up in the “recording” tab and I can pick a microphone there and set the sound level.
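If you'd rather see what PulseAudio thinks your inputs are before poking at the mixer, something like this rough sketch lists them. It assumes the `pactl` command-line tool is installed and that `pactl list short sources` prints tab-separated columns with the index first and the source name second. The helper names are made up for illustration. Sources ending in ".monitor" are the loopback-style ones that look like microphones but aren't.

```python
import shutil
import subprocess


def parse_sources(pactl_output):
    """Parse the tab-separated text printed by `pactl list short sources`."""
    sources = []
    for line in pactl_output.splitlines():
        fields = line.split("\t")
        if len(fields) < 2 or not fields[0].isdigit():
            continue  # skip blank or unexpected lines
        name = fields[1]
        sources.append({
            "index": int(fields[0]),
            "name": name,
            # PulseAudio exposes each output as a ".monitor" source (a loopback)
            "is_monitor": name.endswith(".monitor"),
        })
    return sources


def list_sources():
    """Ask PulseAudio for its sources; returns [] if pactl is unavailable."""
    if shutil.which("pactl") is None:
        return []
    try:
        result = subprocess.run(
            ["pactl", "list", "short", "sources"],
            capture_output=True, text=True, check=False,
        )
    except OSError:
        return []
    return parse_sources(result.stdout)


if __name__ == "__main__":
    for source in list_sources():
        kind = "monitor" if source["is_monitor"] else "input"
        print(f'{source["index"]:>3}  {kind:<8} {source["name"]}')
```

Picking the actual device for Mycroft still happens in the mixer's "recording" tab as described above; this only helps you tell the real microphones from the fake ones.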

Cool. But pretty much anything I say to it, it just says it doesn’t understand.

This kinda fixed itself without me really understanding why. Suspect it was downloading skills after startup, and probably took longer to do so than it would have done if I didn’t keep restarting it after changing something trying to figure out what was happening.

If its voice said “I’m downloading skills now, leave me alone for a bit”, then I missed that.

But now it’s working okay. I say “Hey Mycroft” and it beeps and then I ask for a joke and get one. I don’t even need to actually say “Hey”, which is good frankly. Just “Mycroft?” will do.

Voice

Mimic is Mycroft’s text-to-speech system. It’s all right and seems to run locally without having to send everything up to the servers or whatever. I hope. It has several voices and you can change which voice Mycroft uses by adding something like this to ~/.mycroft/mycroft.conf:

"tts": { "module": "mimic", "mimic": { "voice": "slt" } }

The “voice” there is one of “ap slt kal awb kal16 rms awb_time” and probably more models to come. They each have faults and issues. “slt” was my favourite, for now at least.
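If you want to flip between voices without hand-editing the file each time, a small helper along these lines would do it. This is only a sketch: it assumes mycroft.conf is strict JSON (if yours has comments in it, `json.loads` will choke), and the function names are mine, not anything Mycroft provides.

```python
import json
import tempfile
from pathlib import Path


def with_voice(config, voice):
    """Return a copy of the config dict with the Mimic voice set."""
    updated = dict(config)
    tts = dict(updated.get("tts", {}))
    mimic = dict(tts.get("mimic", {}))
    mimic["voice"] = voice
    tts["module"] = "mimic"
    tts["mimic"] = mimic
    updated["tts"] = tts
    return updated


def update_conf_file(path, voice):
    """Read the JSON config at `path` (if any), set the voice, write it back."""
    path = Path(path)
    config = json.loads(path.read_text(encoding="utf-8")) if path.exists() else {}
    path.write_text(json.dumps(with_voice(config, voice), indent=2) + "\n",
                    encoding="utf-8")


if __name__ == "__main__":
    # Demo against a throwaway file rather than the real ~/.mycroft/mycroft.conf
    with tempfile.TemporaryDirectory() as tmp:
        conf = Path(tmp) / "mycroft.conf"
        update_conf_file(conf, "slt")
        print(conf.read_text(encoding="utf-8"))
```

Mycroft presumably needs a restart to pick up the change.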

It’s very hard to wreck a nice beach

Or indeed to recognize speech.

It’s using some external service for Speech To Text still. Probably Google. Damn.

Fixing that is the most important development goal for them as far as I’m concerned. Half the reason for not just using Google or Amazon or whoever is to avoid sending every request over the internet, let alone the audio behind every request.

No matter. Apparently Mozilla are on it, and perhaps we’ll have that soon? Mozilla get a bit of hate lately but I still reckon they’re among the best.

Desktop Control

I’d like to be able to say “Mycroft, move the mouse to the right 20 pixels” and have that work.

There’s a skill to enable that so let’s get it on!

Putting the skill in ~/.mycroft/skills doesn’t work. Hummm. Why are all these other skills directories here then? Yikes! Why are they empty?

Okay. We’ll put it in /opt/mycroft/skills then… Good. Works there. Sort of:

Error: No module named ‘pyautogui’

“pip3 install pyautogui” and “pip install pyautogui” fail to fix the problem.

It’s installed, but apparently unavailable somehow. God damn, Python and its Environments. How do I make the install actually work for the environment which Python is using for Mycroft then?

There’s a script called ./dev_setup.sh which seems to do that.

Run that, THEN run “pip install” and that seems to do the trick…

Except for also Xlib and num2words. But they can be handled the same way.
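The underlying trap is that “pip” on your path may belong to a different Python than the one Mycroft runs under. A sketch of how to check, assuming you run it with Mycroft's own interpreter (the one from its virtualenv): it reports which modules that interpreter can't see and builds a pip command tied to that same interpreter. The function names are made up, and note the PyPI package for the Xlib module is called python-xlib, so the module name and the package name don't always match.

```python
import importlib.util
import sys


def in_virtualenv():
    """True when this interpreter is running inside a venv/virtualenv."""
    return sys.prefix != getattr(sys, "base_prefix", sys.prefix)


def missing_modules(names):
    """Return the top-level module names this interpreter cannot import."""
    return [name for name in names if importlib.util.find_spec(name) is None]


def pip_command(packages):
    """Build a pip install command bound to *this* interpreter."""
    return [sys.executable, "-m", "pip", "install", *packages]


if __name__ == "__main__":
    print("interpreter:", sys.executable)
    print("inside a virtualenv:", in_virtualenv())
    missing = missing_modules(["pyautogui", "Xlib", "num2words"])
    print("missing modules:", missing or "none")
    if missing:
        # Package names may differ from module names (Xlib -> python-xlib)
        print("try:", " ".join(pip_command(missing)))
```

Running `python -m pip` instead of bare `pip` is the general trick: whatever Python you invoke is the one that gets the package.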

“Hey, mycroft, type hello”

Hurray! Mycroft can type for me.

“Mycroft, press return” “Pressing none”

Ugh. Oh well, we’ll come back to that later…

Wake Word

Mycroft is a pretty cool name, but it’s a name for a software package, not a particular install of a software package. Surely *my* Mycroft should have its own name, to identify it as opposed to all the others.

Task 1: Train a new wake up word.

The wake-up system is called “Precise” and uses some kind of machine learning system based on about two seconds of audio. Probably a neural net I think.

Installing Precise seems to go okay.

Next you need to record a couple of dozen samples of you saying the wake-up phrase, using “precise-collect”.

I run that and pace around my studio carrying my RF keyboard, pressing space and saying “Yo! Willow!” from every corner and in various pitches and tones of voice.

Some of the resulting files need to go into the training directory and some into the test directory and then we run “precise-train” and it trains a model to respond to that. And we test it!
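Splitting the recordings by hand gets tedious, so here is a sketch of doing it reproducibly. The 80/20 split is my arbitrary choice, and the directory names in `move_samples` (“wake-word” and “test/wake-word” under the model folder) are what I believe Precise expects, so treat them as an assumption and check against your own setup.

```python
import random
import shutil
from pathlib import Path


def split_samples(filenames, test_fraction=0.2, seed=0):
    """Shuffle deterministically and return (training, test) lists."""
    shuffled = sorted(filenames)
    random.Random(seed).shuffle(shuffled)
    test_count = max(1, round(len(shuffled) * test_fraction)) if shuffled else 0
    return shuffled[test_count:], shuffled[:test_count]


def move_samples(recordings_dir, model_dir, test_fraction=0.2):
    """Move .wav files into the assumed training and test folders."""
    wavs = [p.name for p in Path(recordings_dir).glob("*.wav")]
    training, test = split_samples(wavs, test_fraction)
    for names, subdir in ((training, "wake-word"), (test, "test/wake-word")):
        target = Path(model_dir) / subdir
        target.mkdir(parents=True, exist_ok=True)
        for name in names:
            shutil.move(str(Path(recordings_dir) / name), str(target / name))
    return len(training), len(test)


if __name__ == "__main__":
    names = [f"yo-willow-{i:02d}.wav" for i in range(24)]
    training, test = split_samples(names)
    print(len(training), "for training,", len(test), "for testing")
```

The fixed seed means the same recordings land in the same pile each time, which helps when you retrain over and over.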

The documentation says to expect “!” or “.” to be on the screen, depending on whether it has heard the phrase lately or not, but all I’m getting is “X”.

A couple of hours wasted before finding that the documentation is wrong. We *expect* an “X” now, more of them on screen means a better match to the wake-up word. And the screen filled with Xs means it’s always-positive.

Which is what we expect. We have only told it what *is* its name, not what *isn’t*.

We need to put a whole bunch of sounds which aren’t “Yo! Willow!” into the not-wake-word directory and then train the network some more.

So I do that. Download a bunch of audio files, train them as not-wake-up-word by putting them into that directory and then doing the incremental-learn command.

But I’m still getting constant positives, even to just silence from the mic.

I’m also getting errors quite a lot:

connect(2) call to /dev/shm/jack-1000/default/jack_0 failed (err=No such file or directory) attempt to connect to server failed

Don’t really know what that means. Is it part of why I’m getting constant false positives?

Tried running Jack, which would be weird if that was the problem coz it’s definitely Pulse Audio in the core product.

Didn’t help anyway.

Not entirely sure what did help in the end. Possibly I was skipping the “-e” parameter? So not doing enough “epochs” or learning? Trying the same things over and over again a few times eventually did the trick anyway.

I have a model, which beeps when I say “Woh Wee Woh” and not when I say “MooMooMoo” at least. Close enough.

So how do I tell Mycroft to use that model instead?

First, convert that model into a different type and then copy it into ~/.mycroft/precise.
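For my own notes, the whole train, test, convert and copy dance could be scripted roughly like this. It's a sketch under assumptions: the argument order for precise-train, precise-listen and precise-convert is as I remember it from the Precise README, 60 epochs is an arbitrary number, and the helper functions are mine. The copy step grabs everything starting with the model's .pb name, in case the converter writes companion files next to it.

```python
import shutil
import tempfile
from pathlib import Path


def precise_commands(model_name, data_dir, epochs=60):
    """Build the command lines for training, testing and converting a model."""
    net_file = f"{model_name}.net"
    return [
        ["precise-train", "-e", str(epochs), net_file, data_dir],  # train
        ["precise-listen", net_file],                              # test live
        ["precise-convert", net_file],                             # .net -> .pb
    ]


def install_model(model_name, source_dir, target_dir):
    """Copy the converted model (and any companion files) into target_dir."""
    target = Path(target_dir).expanduser()
    target.mkdir(parents=True, exist_ok=True)
    copied = []
    for path in sorted(Path(source_dir).glob(f"{model_name}.pb*")):
        shutil.copy2(path, target / path.name)
        copied.append(path.name)
    return copied


if __name__ == "__main__":
    for command in precise_commands("yoWillow", "yoWillow/"):
        print(" ".join(command))
    # Demo the copy step with stand-in files in throwaway directories
    with tempfile.TemporaryDirectory() as src, tempfile.TemporaryDirectory() as dst:
        (Path(src) / "yoWillow.pb").write_bytes(b"")
        print(install_model("yoWillow", src, dst))
```

Nothing here runs the Precise tools; it only prints the commands, so you can eyeball them before pasting.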

Okay. Still not working. What else?

Edit ~/.mycroft/mycroft.conf and add:

    "hotwords": {
      "hey mycroft": {
        "module": "precise",
        "local_model_file": "/home/pre/.mycroft/precise/hey-mycroft.pb"
      },
      "yo willow": {
        "module": "precise",
        "phonemes": "HH EY . M AY K R AO F T",
        "threshold": 1e-90,
        "local_model_file": "/home/pre/.mycroft/precise/yoWillow.pb"
      }
    },

While also ensuring to have

"listener": { "wake_word": "yo willow", "stand_up_word": "wake up" },

And… It still doesn’t work. Just no response from it at all, even if I get right up to the mic and whisper “Yo, willow” at just the right levels from as close as can be.

Nope.

Still dunno what’s going on there. It works with the pre-converted .net file and the “precise-listen” command, but won’t work when I make Mycroft try to use it.

I remain stuck here, and I don’t know why that model doesn’t work in the Mycroft environment.
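One thing that can at least be ruled out mechanically is a mismatch inside the config itself. This sketch checks that the listener's wake word has a matching “hotwords” entry and that the model file it points at exists. It only looks at the keys shown above, takes an already-parsed dict, and `check_wake_word` is a made-up helper, not part of Mycroft. It won't explain why a correct config still fails.

```python
import tempfile
from pathlib import Path


def check_wake_word(config):
    """Return a list of problems found in the wake-word part of the config."""
    wake_word = config.get("listener", {}).get("wake_word")
    if wake_word is None:
        return ["listener.wake_word is not set"]
    entry = config.get("hotwords", {}).get(wake_word)
    if entry is None:
        return [f"no hotwords entry named {wake_word!r}"]
    problems = []
    model = entry.get("local_model_file")
    if model is None:
        problems.append(f"hotwords entry {wake_word!r} has no local_model_file")
    elif not Path(model).expanduser().is_file():
        problems.append(f"model file not found: {model}")
    return problems


if __name__ == "__main__":
    # A throwaway file stands in for the real converted model
    with tempfile.NamedTemporaryFile(suffix=".pb") as model:
        config = {
            "listener": {"wake_word": "yo willow"},
            "hotwords": {
                "yo willow": {"module": "precise", "local_model_file": model.name}
            },
        }
        print(check_wake_word(config) or "config looks consistent")
```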

No matter. A skill! I should learn how to write a skill…

Writing a skill

Skills seem to consist of a directory containing:

- __init__.py: The Python that runs the skill. This is where your code will go.

- Vocab files: They tell the system what key phrases to listen for. Each skill may contain many of them, and each of them may have many ways to phrase the same thing. Each phrasing goes on its own line, in one text file per thing the user might say. They live in ./vocab/en-us/UNIQUENAME.voc and the “.voc” is important. It won’t find the file unless the name ends in .voc.

- Dialog files: Yes, apparently the missing “ue” at the end is important. Don’t forget to spell it weird. They contain replies the system may enunciate, and again each line contains a different alternate reply. They must end in “.dialog” and again, importantly, no “ue” at the end like there ought to be.

There’s also a “test” folder and a settings.json but I’m not sure what they do.

Here’s the __init__.py for a simple skill called “precroft”:

    from adapt.intent import IntentBuilder

    from mycroft.skills.core import MycroftSkill
    from mycroft.util.log import getLogger

    __author__ = 'pre'

    LOGGER = getLogger(__name__)


    class PrecroftSkill(MycroftSkill):
        def __init__(self):
            super(PrecroftSkill, self).__init__(name="PrecroftSkill")

        def initialize(self):
            syscheck_intent = IntentBuilder("syscheckIntent").require("systemscheck").build()
            self.register_intent(syscheck_intent, self.handle_syscheck_intent)

        def handle_syscheck_intent(self, message):
            self.speak_dialog("systemscheck")
            print("PreLog: " + str(vars(message)))
            print("PreLog: utterance was " + str(message.data['utterance']))

        def stop(self):
            pass


    def create_skill():
        return PrecroftSkill()

Where it says require(“systemscheck”), that “systemscheck” is the name of a vocab file. In theory, any of the utterances in the file ought to trigger the handler.

Where it says self.speak_dialog(“systemscheck”) that is the name of a dialog file. In theory, any of the phrases in the file could be said when the system speaks it.
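To make the file layout concrete, here is a sketch that writes a matching pair of files for “systemscheck”, one phrase per line. The vocab path follows the ./vocab/en-us/ layout described earlier; putting the dialog file under dialog/en-us/ is my assumption by analogy. `write_phrase_files` is a made-up helper and the phrases are just examples.

```python
import tempfile
from pathlib import Path


def write_phrase_files(skill_dir, name, utterances, replies, lang="en-us"):
    """Write NAME.voc and NAME.dialog under the skill directory."""
    skill_dir = Path(skill_dir)
    vocab_file = skill_dir / "vocab" / lang / f"{name}.voc"
    dialog_file = skill_dir / "dialog" / lang / f"{name}.dialog"  # assumed path
    for path, lines in ((vocab_file, utterances), (dialog_file, replies)):
        path.parent.mkdir(parents=True, exist_ok=True)
        # One alternative phrasing per line
        path.write_text("\n".join(lines) + "\n", encoding="utf-8")
    return vocab_file, dialog_file


if __name__ == "__main__":
    # Written to a throwaway directory so nothing real is touched
    with tempfile.TemporaryDirectory() as tmp:
        voc, dlg = write_phrase_files(
            tmp,
            "systemscheck",
            utterances=["systems check", "how are the systems"],
            replies=["The systems are fine"],
        )
        print(voc.relative_to(tmp), "->", voc.read_text(encoding="utf-8").splitlines())
        print(dlg.relative_to(tmp), "->", dlg.read_text(encoding="utf-8").splitlines())
```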

Now, you might think if a phrase was in the vocab file then that means if you say that phrase into the microphone then the relevant handler will be called.

Nope.

Ugh.

You may have “how are the systems?” in your vocab file, and yet still when you say that into the mic Mycroft will reply “noun, instrumentality that combines interrelated interacting artifacts designed to work as a coherent entity” and not “The systems are fine” or some other line from the Dialog as you may expect.

Even though it works fine for other random phrases in those same files. “Systems check” always does it, say.

Adapt

“Adapt” is the name of the bit of the software which determines which handler to call depending on which phrase was said.

I can’t currently look too deeply into its source code, coz it seems to have been installed as a binary or a Python library or something rather than being in the core repository, so the black box “determine_intent” function doesn’t have its source code on my machine right now.

An exact phrase in my skill seems to be able to fail to match because of some kind of partial phrase in another skill. And so get overridden by it. Even sometimes ending up with that skill saying “I don’t understand”. Despite the fact the exact phrase I uttered is there in my skill’s vocab file.

So not sure what’s happening there. Is “Adapt” a neural network? That would explain why its results are arbitrary, undeterminable and wrong I suppose.

I mean, an exact phrase in my vocab file, to the letter, and still it matches to some other thing instead. And nothing to say why in the logs so far.

Strange.

Open Questions

- Can I somehow tell the system my “Precroft” skill is absolutely the most important of all the skills and should never be possible to be overridden by any other, come what may?

- Or can I just swap out “adapt” for a regexp? Or otherwise improve it? It feels really weak at the moment. I mean. The *exact phrase* I say is in my vocab file but still somehow overridden. Insane.

- How do I get “Yo Willow!” to actually work as a wake-up-phrase? It works with precise-listen damnit!

- Why won’t the Desktop Skill’s “Press Return” work? Or the mouse stuff? I think I can ditch this and just write some skills to use wmctrl. If I can stop Adapt making stupid mistakes. I don’t need cross-platform or anything.

- How to turn on some debug-logging of *every internet request*, I want to see what it’s doing more than I can right now. Maybe even force it to *ask* before it looks up internet stuff. I would like it to say “I’m going to look up something about X on Wikipedia now, is that okay”, or at least be *able* to turn that mode on, maybe on a skill by skill basis.

Dunno. Obviously. This is what “open questions” means.

Currently it feels like “Precise” is pretty good, or would be if I could get the Mycroft system to work as well as the debug listener at least.

“Intent” feels arbitrary and insane. I have no idea how it’s working, and in fact it doesn’t really feel like it *is* working. I think I’d sooner swap it out for a regexp engine at this point.
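For what it's worth, the regexp engine I have in mind is not much code. This is a standalone sketch and has nothing to do with how Adapt works inside, nor does it plug into Mycroft as written: patterns are tried in registration order, the whole utterance has to match, and the first hit wins, so the behaviour is at least predictable. The class, the patterns and the replies are all invented for illustration.

```python
import re


def normalise(utterance):
    """Lowercase, drop punctuation and collapse runs of whitespace."""
    text = re.sub(r"[^\w\s]", "", utterance.lower())
    return " ".join(text.split())


class RegexIntents:
    """Dispatch an utterance to the first handler whose pattern fully matches."""

    def __init__(self):
        self._routes = []

    def register(self, pattern, handler):
        self._routes.append((re.compile(pattern), handler))

    def dispatch(self, utterance):
        text = normalise(utterance)
        for pattern, handler in self._routes:
            match = pattern.fullmatch(text)
            if match:
                return handler(match)
        return None  # nothing matched; the caller decides what to say


intents = RegexIntents()
intents.register(
    r"systems check|how are the systems",
    lambda match: "The systems are fine",
)
intents.register(
    r"move the mouse (?:to the )?(?P<direction>left|right) (?P<pixels>\d+) pixels",
    lambda match: (match.group("direction"), int(match.group("pixels"))),
)

if __name__ == "__main__":
    print(intents.dispatch("How are the systems?"))
    print(intents.dispatch("move the mouse to the right 20 pixels"))
```

Registration order doubles as priority here, which is crude but would cover the “my skill always wins” case: register it first.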

The Speech-To-Text isn’t even there, it’s just Google in the background.

The text-to-speech is okay, but not a quantum leap above the other open-source systems.

Probably still don’t really want an always-on-mic in my flat. Perhaps it’d work better as a wireless lapel mic with a touch-to-speak button? It does not work well when there’s background sound in my flat certainly.

I am interested. When I understand “Adapt” more maybe I can make that work for my case.